Australian and Swedish chapters launched - PauseAI May Newsletter

Announcing PauseCon London, and the launch of two new national chapters.

Welcome to the May edition of the PauseAI newsletter. With springtime well underway for those of us in the Northern Hemisphere, I hope you’re all enjoying the sun!

As part of our newsletter revamp, you’ll get information on organisational updates, upcoming events, and the latest news on frontier AI regulation.

PauseCon London

This month, we were happy to announce PauseCon London, the first PauseAI conference. Across a range of talks, workshops, and panel discussions, PauseCon attendees will have the opportunity to learn about AI governance, community building, and digital organising.

We’ll be joined by:

Joep Meindertsma, Founder of PauseAI

Connor Leahy, CEO of Conjecture

Rob Miles, YouTuber

Kat Woods, Founder of Nonlinear and Charity Entrepreneurship

David Krueger, Assistant Professor at the University of Montreal

Tara Steele, Director of The Safe AI for Children Alliance

PauseCon London will take place from the 28th to the 30th of June, with a social evening on Friday the 27th. We can provide accommodation in London for up to 50 attendees, so if you’ll be travelling from outside London, apply early to reserve your spot. Applications are still open here: https://pausecon.org/

PauseCon will end with our largest protest to date on Monday the 30th. You don’t need to attend PauseCon to join the protest. Whether or not you’re attending PauseCon, please register for the protest on its separate Luma event page: https://lu.ma/bvffgzmb

Australian and Swedish national chapters launched

Our eight existing national chapters are now joined by new groups in Australia and Sweden.

We were in Stockholm as part of our last international protest calling for safety to be the focus of the Paris AI Action Summit. Swedish news outlet SvD Näringsliv interviewed organiser Jonathan Salter (pictured below), who is now the head of PauseAI Sweden.

The launch of PauseAI Australia comes as elections are due to be held on the 3rd of May. Australians For AI Safety have designed a great tool that allows voters to compare each party’s stance on AI safety.

Our Australian chapter will be jointly led by Mark Brown and Michael Huang, who organised the Paris AI Action Summit protest in Melbourne (pictured below). You can get in touch with them here.

All of our national chapters are moving to a regular schedule of recruitment, social, and lobbying events, to encourage local growth and give volunteers simple yet effective ways to get involved.

If you’re interested in establishing a new national chapter in your own country, please get in touch with Ella, our organising director.

EU asking for feedback on frontier AI regulation

The European Union is inviting AI companies, academia, nonprofits and private citizens to provide feedback on the provisions for general-purpose AI systems under the EU AI Act. PauseAI is preparing an official response, but we encourage readers with relevant knowledge to submit their own here. The deadline for submissions is the 22nd of May.

Maxime Fournes appears on Le Futurologue Podcast

The director of our French chapter, Maxime Fournes, has continued his streak of podcast appearances, going on Le Futurologue Podcast to discuss the urgency of an international treaty to pause frontier AI development. At 160,000 views and rising, the video is now the most popular on Le Futurologue’s channel.

Social media

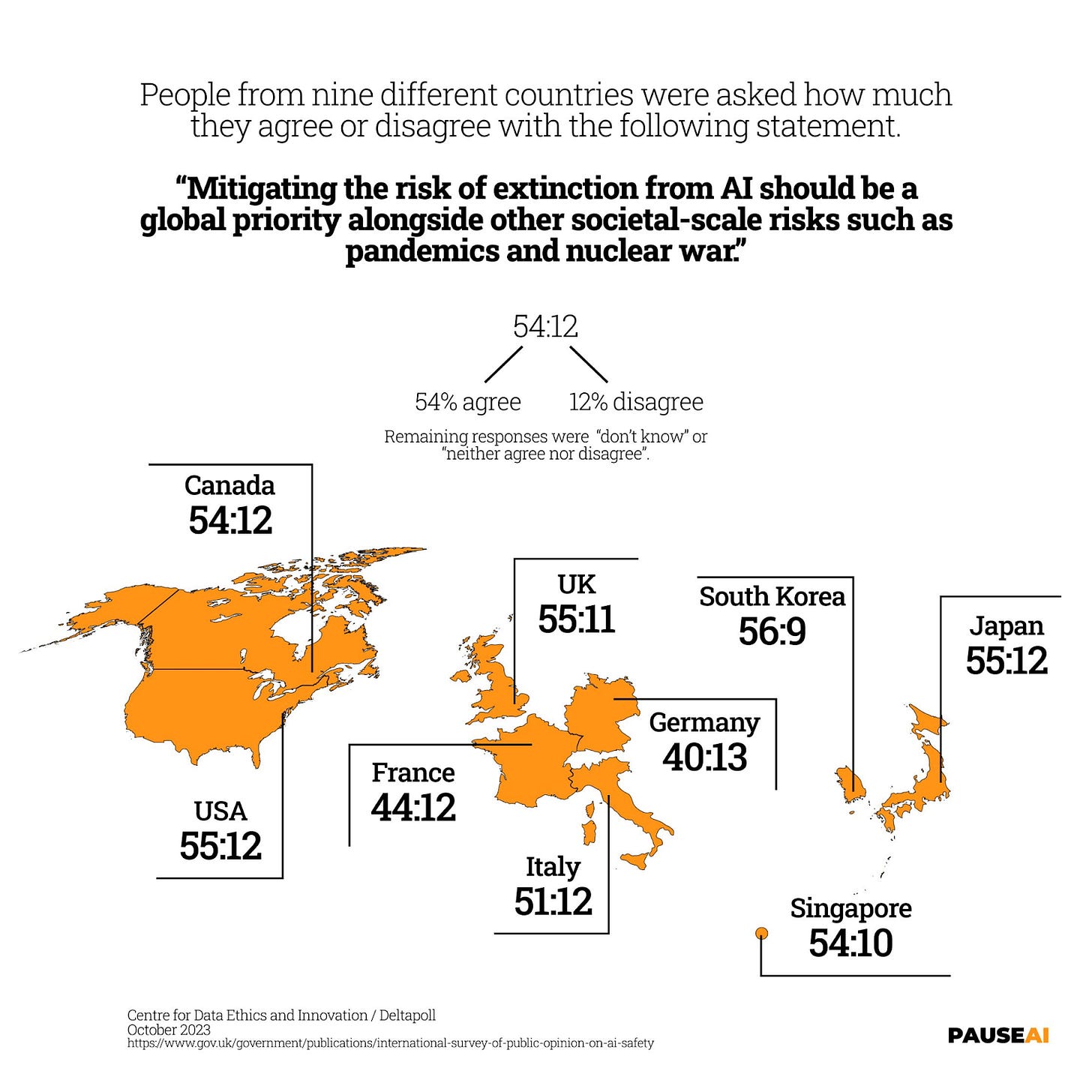

We shared this 2023 poll across our social media channels. Commissioned by the UK government, it found widespread international support for the Center for AI Safety’s statement on the risk of extinction posed by AI.

It’s yet more evidence that the public understands the extinction threat posed by increasingly powerful and uncontrollable AI, and wants governments to do something about it.

If you’re on Threads, you can now give PauseAI a follow here.

AI news

The UK AI Security Institute released a report detailing its new benchmark for autonomous replication capabilities in AI systems. Whilst today’s frontier models can perform several of the tasks that autonomous replication would require, they still fall short in a few important areas. However, AISI clearly states that, as AI companies continue to build increasingly powerful and competent models, dangerous autonomous replication capabilities “could soon emerge”.

OpenAI whistleblower Daniel Kokotajlo (along with blogger Scott Alexander and a group of forecasters) wrote AI 2027, a scenario detailing a plausible path for AI development and governance over the next two years. It’s a great read, and could help people to internalise the consequences of exponential growth in AI capabilities. It predicts the public coming to view AI as one of the most important problems in the world, and the approval ratings of AI companies plummeting as automated AI research turns many technologies currently in the realm of science fiction into reality. Ultimately, the reader can choose between two endings: the “slowdown” ending, in which smarter-than-human AI remains under human control and acts in our interests, and the “race” ending, in which humanity goes extinct. In the scenario, the slowdown does not take effect until 2027, which, in the real world, may be too late.

Nobel laureate Geoffrey Hinton once again appeared on CBS to discuss his views on the threat of humanity losing control to AI systems that are more intelligent than we are, and why he thinks that if we continue on our current trajectory, it’s “going to happen”. He concluded that “we have to have the public put pressure on governments to do something serious about it.”

The Executive Director of the Future of Life Institute, Anthony Aguirre, launched the Keep the Future Human campaign, in which he proposes “hard limits on computational power” to stop the unwinnable race to artificial general intelligence. He stresses that regulatory measures in AI chip production, such as hardware-enforced licensing, network restrictions, and geolocation, are technically feasible. Keep the Future Human makes it clear that we can choose not to build uncontrollable smarter-than-human general AI, and can instead reap the benefits of controllable tool AI.

Thanks for reading!