From Saviour Startup to Shoggoth: OpenAI’s History reflects the battle for the soul of AI

Guest post by Aslam Husain, PauseAI volunteer and Communications Team member

There’s a viral meme shared in artificial intelligence circles: a hideous, slithering octopus, with a happy face at the end of its tentacle. The meme is based on H.P. Lovecraft’s monster, the Shoggoth, and has come to represent the dangers lurking underneath the cheery exterior of powerful chatbots like ChatGPT. OpenAI, the most powerful AI company today, has come under increasing scrutiny for its products’ ability to mass-produce fake news, entrench biases and potentially wipe out humanity. Yet OpenAI was born to protect humanity from a world beset by these very harms.

Imagine, instead of the Shoggoth, a non-profit AI company dedicated to building safe AI tools for everyone rather than shareholders. Imagine a company that seeks to freely collaborate with others across all sectors. Imagine an institution founded by 11 luminaries of the tech sector who collectively agree to develop AI in the safest way possible for the benefit of all humanity. Imagine a company like that.

Back in 2015, this was nearly verbatim how OpenAI, the current for-profit titan of AI, introduced itself to the world.

How many of those original, noble principles remain?

None:

Built for everyone?

OpenAI is now for-profit and distributes its products through paid subscriptions.

Encourage researchers to publish work?

Employees have been muzzled by non-disclosure agreements and have made whistleblower complaints to the Securities and Exchange Commission.

Freely collaborate with other sectors?

Lawsuits have been filed against OpenAI for theft of intellectual property.

Untethered to tech monopolies?

The FTC is pursuing an antitrust investigation into the relationship between Microsoft, Nvidia and OpenAI.

Build AI safely?

The safety team has been disbanded.

How many of the 11 founding members remain? Just 3: Sam Altman, Greg Brockman (who recently left on sabbatical) and Wojciech Zaremba. Only 1 of the original board directors remains: Quora CEO Adam D’Angelo. And how many of OpenAI’s safety team still work at the company? None.

What happened? How did OpenAI become the opposite of what it set out to be in just 8 years?

Let’s take a look at a short history of OpenAI.

2015 - The “Good Guys” and the Fate of Humanity

The Rosewood Sand Hill Hotel in Menlo Park, California. Photo: rosewoodhotels.com

In the summer of 2015, Sam Altman, then president of Y Combinator, brokered a meeting between Tesla CEO Elon Musk and some of the brightest minds in artificial intelligence. The meeting took place in a private room at the grand Rosewood Sand Hill Hotel in Menlo Park, California, just a twenty-minute drive from Google headquarters. Among those gathered were Altman, Musk, Dario Amodei and Ilya Sutskever (both AI researchers at Google), Greg Brockman (former CTO of the Y Combinator startup Stripe), Chris Clark (director at Altman’s first company, Loopt), and Paul Christiano (an AI researcher).

At the time, most AI scientists worked either in academia or under the protective wing of Big Tech, where salaries exceeded “the cost of a top NFL quarterback”. Google, as ever, seemed to be dominating the field, having recently acquired the London artificial intelligence company DeepMind for a cool half billion. That acquisition nullified an earlier investment in the company made by Musk back in 2012.

Musk and Altman shared an interest in keeping AI out of the hands of the “bad guys”, and also shared a track record of growing startups into massively successful companies. As president of Y Combinator, Altman led the accelerator that had launched some of Silicon Valley’s biggest names, including Dropbox, DoorDash, Reddit, and Airbnb. Altman’s boss, Paul Graham, once remarked about him, “You could parachute him into an island full of cannibals and come back in 5 years and he'd be the king.”

There was obvious chemistry at the meeting. According to Brockman, they discussed “the state of the field, how far off human-level AI seemed to be, what you might need to get there, and the like. The conversation centred around what kind of organisation could best work to ensure that AI was beneficial. It was clear that such an organisation needed to be a non-profit, without any competing incentives to dilute its mission.”

All the conditions necessary for a company to safely develop AI seemed to embody a single theme: openness.

2015 - OpenAI launches as a radical new company

Image: openai.com

In December of that year, OpenAI launched with 1 billion dollars pledged by Altman, Brockman, Musk, and big names in venture capital, including Peter Thiel, co-founder of PayPal and Palantir; Reid Hoffman, co-founder of LinkedIn; and Jessica Livingston, co-founder of Y Combinator. Amazon and Infosys also made hefty contributions.

In a bid to tempt back some of the talent OpenAI had poached, Google and Facebook (now Meta) offered researchers a “borderline crazy” amount of money. OpenAI could not match the cash, but it didn’t need to: it offered an opportunity Big Tech could not, the chance to work at a company where research wasn’t beholden to a bottom line. This was going to be a radically different kind of company. Altruistic. Open source. Research driven. And for young, idealistic AI scientists like Wojciech Zaremba, that opportunity couldn’t be quantified in dollars and cents. In Zaremba’s own words, “OpenAI was the best place to be.”

In its first year, OpenAI focussed on building tools from existing machine learning (ML) algorithms rather than advancing the field itself. It released its first product, OpenAI Gym, a Python toolkit for teaching AI agents how to perform actions like playing Pong or Go. Despite the excitement at its launch, OpenAI seemed to have contributed little to the field. Even in the esoteric ecosphere of ML, OpenAI remained a backwater. The New Yorker reported that in the summer of 2016, Dario Amodei, who had chosen to remain with Google after that initial meeting at the Rosewood Hotel, visited OpenAI to tell them that no one knew what they were doing. “What is the goal?” he asked. Brockman responded, “Our goal right now… is to do the best thing there is to do. It’s a little vague.”

2016 - Google takes the lead with DeepMind AI Breakthrough

Fan Hui struggles against Google DeepMind’s AlphaGo. Image: YouTube

Everything changed when Google’s AI AlphaGo beat three-time European Go Champion Fan Hui. What many computer scientists thought possible only in another 100 years was achieved with an artificial neural network trained on data from 100,000 games. What made AlphaGo’s victory significant was that it had learned to play Go not from explicit instructions, but from making connections in its training data. The implication was huge: this approach could unfetter artificial intelligence from narrow domains like board games and allow AIs to perform potentially any task, given enough data.

OpenAI took notice, wagering that AlphaGo’s approach, which focussed on data and processing power rather than algorithmic innovation, was the future. The approach came with an added benefit: while advances in algorithmic innovation were unpredictable at best, OpenAI could reliably project improvements in processing power. And acquiring data was simple, provided you had deep enough pockets. Processing power, known in the field as compute, would become the commodity that drove frontier AI forward. OpenAI later calculated that the amount of compute used in the largest AI training runs had been “increasing exponentially with a 3.4-month doubling time… Since 2012, this metric has grown by more than 300,000x”.

But how could a non-profit like OpenAI compete with the compute resources of monolithic companies like Google?

2017 - Google researchers revolutionise Deep Learning

In June 2017, a group of Google researchers published a landmark paper that would revolutionise Deep Learning. The paper outlined a new kind of machine learning architecture, known as the Transformer, that allowed a neural network to process a large input of text all at once, rather than sequentially, word by word. The results were not only more efficient but more accurate by a wide margin. While Google did not immediately act upon the results, Sutskever, now lead scientist at OpenAI, suggested the company adopt this radical new approach. This was a pivotal moment for OpenAI, as the Transformer became the basis for its Generative Pre-trained Transformer (GPT) models and, eventually, its breakthrough product, ChatGPT. The Transformer’s power suggested that a large language model could keep improving as long as it was given more data, underscoring the need for more compute.

2018 - Musk and Altman feud; OpenAI changes its mission

L-R: Elon Musk and Sam Altman. Image: Vanity Fair

Concerned that Google was rapidly outpacing every other AI company, Musk proposed that he take over OpenAI or absorb it into Tesla, presumably so that it could drop its non-profit status or draw on his own fortune to afford the compute necessary to beat Google. When Altman and his fellow founders rejected Musk’s leadership bid, Musk abandoned the company, withdrawing a billion-dollar donation he had pledged. With Musk’s money no longer at their disposal and only a handful of donors replacing him, OpenAI quietly updated its mission statement to accommodate a for-profit model. “We anticipate needing to marshal substantial resources to fulfill our mission,” it wrote on its website, “but will always diligently act to minimize conflicts of interest among our employees and stakeholders that could compromise broad benefit.”

2019 - The first GPTs

From 2018 to 2019, OpenAI set to work training its first two large language models, GPT and GPT-2, on massive amounts of data. But after early tests of GPT-2, OpenAI released a paper on the dangers of the new technology, highlighting its potential to weaponise fake news. This was the first time OpenAI’s GPT models made the news cycle. But despite the sensational headlines, many in the scientific community believed OpenAI had overstated the implications of its technology.

2019 - The transformation into a for-profit venture

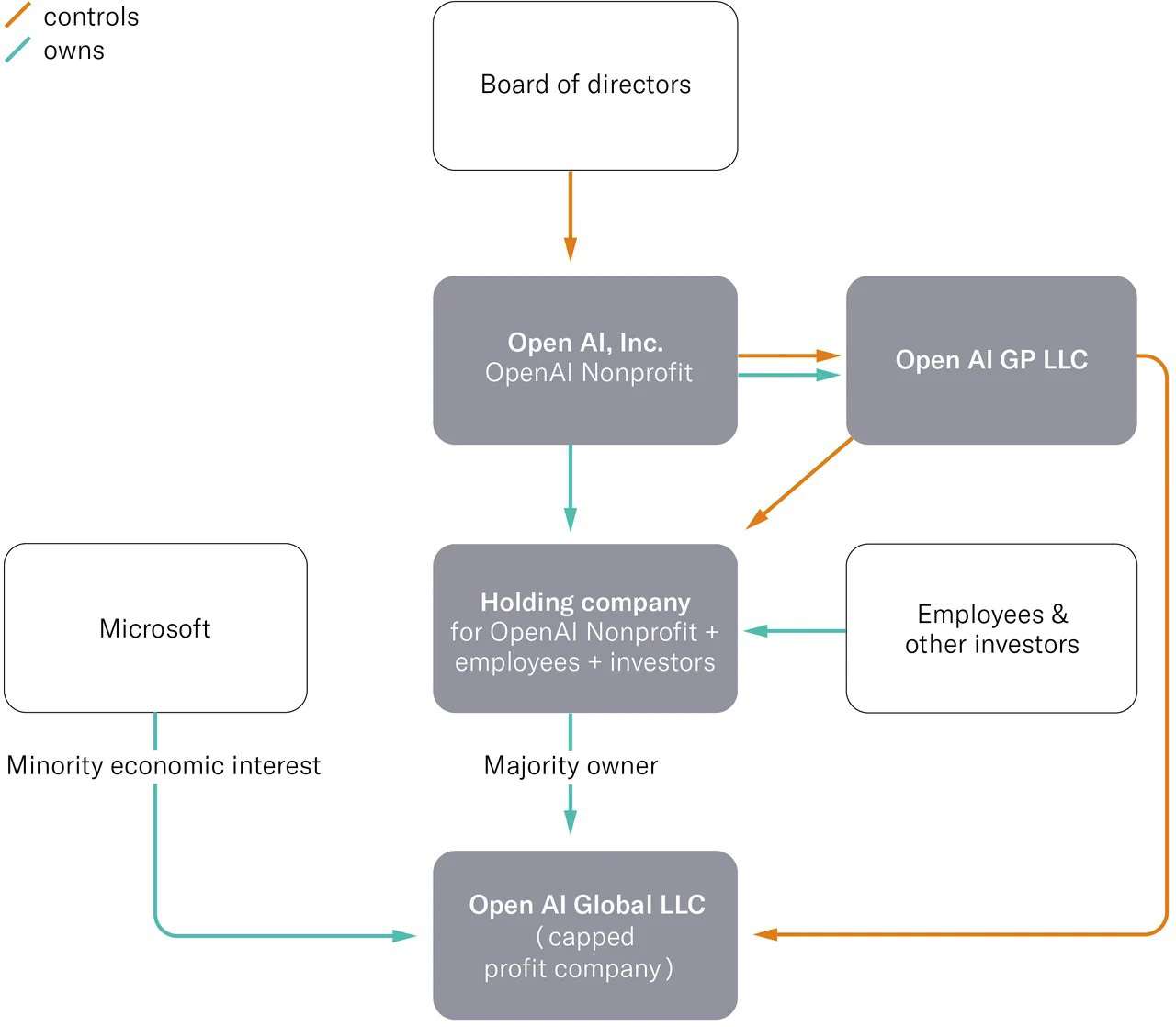

OpenAI’s hybrid profit / non-profit corporate governance structure. Image: openai.com

On March 11th, 2019, a “capped” for-profit arm of OpenAI was formally introduced. To reconcile the profit arm with its non-profit mission, the company conjured a convoluted corporate structure in which the profit arm was a subsidiary, supposedly accountable to the non-profit board. On its website, OpenAI justified the change as an adaptation to the evolving AI landscape: “we want to increase our ability to raise capital while still serving our mission, and no pre-existing legal structure we know of strikes the right balance”. The for-profit subsidiary would allow investors to buy equity with a cap on their returns, but for its first investors that cap was set at 100 times their investment, so high that the Observer pointed out it “might as well be non-existent”.

2019 - A billion dollar deal with Microsoft

L-R: Sam Altman and Microsoft CEO Satya Nadella. Image: Financial Times.

The new corporate governance structure opened the door to a billion-dollar deal with Google’s rival Microsoft. Flush with capital, OpenAI financed a new supercomputer that could train massive transformer models, paving the way for the next generation of GPTs.

The following summer, OpenAI released GPT-3. The model had 175 billion parameters (the internal variables an AI uses to make predictions). Compared to GPT-2’s measly 1.5 billion parameters, it was a monumental upgrade.

2020 - OpenAI Exodus over safety concerns

L-R: Sam Altman and Dario Amodei, former OpenAI scientist and current Anthropic CEO. Image: interestingengineering.com

In late 2020, vice president of research Dario Amodei and 15 other scientists left OpenAI to start a new safety-focussed AI company, Anthropic. Amodei had previously left Google and joined OpenAI in 2016 “driven by the purpose of doing safe AI research”. Dario’s sister, Daniela, who had served as VP of Safety and Policy at OpenAI, later stated that the siblings left OpenAI “because of concerns around the direction. We wanted to be sure the tools were being used reliably and responsibly”.

Following the Anthropic exodus, Paul Christiano also left. Christiano was one of the key architects of Reinforcement Learning from Human Feedback (RLHF), a technique for aligning AI with human values. After leaving OpenAI, he set up the Alignment Research Center and is now head of AI safety at the US AI Safety Institute. With so many senior researchers jumping ship over safety concerns, it was clear that something was rotten in the state of OpenAI.

2022-2023 - ChatGPT is released to the public

OpenAI released ChatGPT to the world on November 30th, 2022. The public were able to interact with the model through a web app, as part of a “research preview”. It became a viral hit. In just two months, ChatGPT hit 100 million users worldwide, shattering records for user growth.

On March 14, 2023, GPT-4 was released. GPT-4 was trained on a much larger dataset than its predecessor and possessed an estimated 1.76 trillion parameters. It stunned users with its ability to perform graduate-level reasoning, passing exams from prestigious law, medical and business schools.

A week after the release of GPT-4, the Future of Life Institute published an open letter signed by leading figures in tech, such as Elon Musk, Steve Wozniak (co-founder of Apple), and Yoshua Bengio (one of the three so-called Godfathers of AI). The letter called for a six-month pause on training AI systems more powerful than GPT-4, citing existential risks to humanity.

That summer, Ilya Sutskever began an internal project, the Superalignment team, dedicated to the question of how to align future AIs more powerful than GPT-4 with human values. In a public show of concern for the safety mission, Altman dedicated 20% of OpenAI’s compute resources to the team. But behind closed doors, it seemed the company was continuing to push the limits of AI capabilities. Rumours swirled later that year of a mysterious new breakthrough called Q*, with Altman teasing a panel of Google and Meta executives that he had “gotten to be in the room, when we ... push the sort of the veil of ignorance back and the frontier of discovery forward.”

Meanwhile, several researchers at OpenAI had written an anonymous letter to the board warning of “a powerful artificial intelligence discovery that they said could threaten humanity.”

Winter 2023 - Boardroom Drama - Altman Fired

Sam Altman confronted by reporters after his firing. Image: The Guardian

The day after Altman made that cryptic statement to the panel of Big Tech execs, OpenAI’s non-profit board called their CEO into a private video call and unceremoniously fired him. Four board members orchestrated the firing: Helen Toner, Tasha McCauley, Adam D’Angelo and Ilya Sutskever. Shortly after they dismissed Altman, they removed founding member Greg Brockman from the board. In a statement, the board said that Altman had been removed for “not being consistently candid” with them. The details behind the shock move did not emerge until the following year, when Toner revealed to the Verge that Altman had failed to tell the board about a corporate venture arm, the OpenAI Startup Fund, which had quietly raised millions to invest in AI-related companies. She also said that Altman had lied to the board about the company’s safety processes on “multiple occasions” and had created a toxic atmosphere in which he would retaliate against those who criticised him.

In the wake of Altman’s dismissal, hundreds of OpenAI employees vowed to resign and follow Altman to Microsoft, which was courting the now-famous AI CEO. Uncertainty clouded the future of OpenAI for 4 days. But finally, faced with a company revolt and investor pressure, the board reinstated Altman, and Altman quickly brought Brockman back. Unsurprisingly, most of the board then stepped down, with only Adam D’Angelo keeping his seat, though Sutskever remained at the company as head of the AI Safety team.

2024 - “Shiny New Products” and a “Reckless” Race for Dominance

L-R: Sam Altman and Ilya Sutskever. Image: CNBC

After a winter of discontent, OpenAI remained publicly quiet at the dawn of 2024. But as the relative California cold turned to spring, OpenAI was hit with a lawsuit from one of its original founders, Elon Musk, who accused the company of abandoning its founding non-profit mission to develop artificial intelligence that would benefit humanity. OpenAI shot back by publishing emails showing that Musk had himself suggested turning OpenAI into a for-profit venture back in 2018. Musk had said that OpenAI should become a “cash cow for Tesla” and that this was the only way to “hold a candle to Google”.

As Altman and Musk clashed, employees began to chafe against the culture of recklessness that had taken hold of OpenAI. In April, Daniel Kokotajlo, an AI researcher who had joined the company in 2022 to work on its governance team, quit. He later told Vox, “I joined with substantial hope that OpenAI would rise to the occasion and behave more responsibly as they got closer to achieving AGI. It slowly became clear to many of us that this would not happen. I gradually lost trust in OpenAI leadership and their ability to responsibly handle AGI, so I quit.”

The following month, on May 13th, OpenAI released GPT-4o (the “o” stands for “omni”), an upgraded model boasting benchmark-busting capabilities, the capacity to “reason across voice, text and vision”, and even a voice of its own, uncannily similar to Scarlett Johansson’s.

The next day, Ilya Sutskever resigned. Shortly after, Jan Leike, co-lead of the Superalignment team, also resigned. In a post on X (formerly Twitter), Leike explained his growing disquiet with OpenAI’s decision to push safety concerns into the background and shiny products into the foreground: “we are long overdue in getting incredibly serious about the implications of AGI. We must prioritise preparing for them as best we can,” he wrote. “Only then can we ensure AGI benefits all of humanity. I joined because I thought OpenAI would be the best place in the world to do this research. However, I have been disagreeing with OpenAI leadership about the company’s core priorities for quite some time, until we finally reached a breaking point.”

Image: X.com

John Schulman, one of the founding members of OpenAI, stepped in to lead the safety team. But Schulman’s tenure was not to last: days later, the entire safety team was dissolved.

2024 - “I’m scared. I’d be crazy not to be”

While the safety team was being disbanded, leaked documents obtained by Vox’s Kelsey Piper revealed that OpenAI had been forcing departing employees to sign aggressive non-disclosure agreements. Altman claimed to have no knowledge of the agreements, but further leaked emails showed that he had personally signed off on them.

As if that were not enough of a blow to the public perception of Altman and his company, in June employees concerned about AI safety anonymously wrote to the Securities and Exchange Commission. They alleged that OpenAI was illegally attempting to stifle government oversight of its technology and that it had broken the law by seeking to undermine federally guaranteed whistleblower protections. “I’m scared. I’d be crazy not to be,” one former employee told Vox.

In July, William Saunders, a safety researcher who had left OpenAI earlier in the year, criticised the company for “prioritising getting out newer, shinier products”. He compared his former employer to the Titanic, a ship that was branded “unsinkable, but at the same time there weren't enough lifeboats for everyone and so when disaster struck, a lot of people died.”

And only a few days ago, Head of Product Peter Deng left the company after only a short stay, and founding member John Schulman jumped ship and swam to Anthropic, where so many of his previous teammates have ended up. Founding member Greg Brockman, who was ousted and reinstated alongside Altman in the winter of 2023, has gone on a year-long sabbatical.

What next for OpenAI?

One wonders if Musk’s and Altman’s concern for AI safety was ever sincere or whether it was merely a disguise for their ambition to outmanoeuvre Google. Details of Musk’s grudge against Google were revealed over the course of the lawsuits he brought against OpenAI. Despite his allegations that OpenAI betrayed its original non-profit mission, Musk himself was all too ready to discard the non-profit model in order to beat Google. Altman’s true intentions are harder to read, but there is no doubt that he is ambitious. The question is, how much does Altman prize his own ambitions over the lives of others? The technology OpenAI has built has Altman’s employees fleeing to safer waters. But Altman, in his own words, isn’t afraid of the end of the world: “I have guns, gold, potassium iodide, antibiotics, batteries, water, gas masks from the Israeli Defense Force, and a big patch of land in Big Sur I can fly to,” he said during a New Yorker interview back in 2016.

A less cynical view might read OpenAI’s history as one of practical adaptations to a changing landscape; that’s certainly how OpenAI always framed its shape-shifting. As machine learning evolved, the commodity of compute became king and forced OpenAI to unmoor itself from its non-profit mission. According to this argument, there is no way to build a state-of-the-art AI company without the ability to brute-force petabytes (a petabyte is 1,000 terabytes) of data through powerful supercomputers. Those resources require capital. And lots of it.

Even before OpenAI released ChatGPT to the public and became a worldwide sensation, tactics of manipulation were evident: “It leans into hype in its rush to attract funding and talent, guards its research in the hopes of keeping the upper hand, and chases a computationally heavy strategy—not because it’s seen as the only way to AGI, but because it seems like the fastest,” wrote MIT Technology Review’s Karen Hao in 2020 after spending three days at OpenAI.

But perhaps a non-profit approach could still work. There is an opportunity to coordinate international efforts to build safe artificial intelligence, relying on collective resources. A global AI treaty, perhaps similar to the nuclear non-proliferation treaties that came out of the Cold War and the horrors of the Hiroshima and Nagasaki bombings, could ensure oversight.

What will become of other AI ventures that have risen to prominence, like Anthropic? Dario Amodei’s company now rivals OpenAI in terms of performance, and its mission is to ensure “transformative AI helps people and society flourish.” But OpenAI was born of the same noble sentiments – can we trust Anthropic to avoid the same pitfalls and resist the siren call of profit? Amodei says we shouldn't trust any of the companies in the current landscape: “Look at all the companies out there. Who can you trust? It’s a very good question. The broader societal question is, is A.I. so big that there needs to be some kind of democratic mandate on the technology?…We need to put positive pressure on this industry to always do the right thing for our users.”

Will market forces inevitably compromise private companies’ ability to build AI safely? Or does OpenAI’s transformation from saviour startup to Shoggoth reflect character flaws in its leadership? OpenAI is Altman and Altman is OpenAI. Since he was reinstated he has consolidated power and removed detractors. The rest are fleeing for the life preservers. Are they fleeing Altman himself, or what he’s asking his company to create? Whatever the answers, the doors are closed at OpenAI.
