PauseAI Newsletter #7: October 24, 2024

Updates from our global volunteer events, AI and the Nobel Prize, and calls to action.

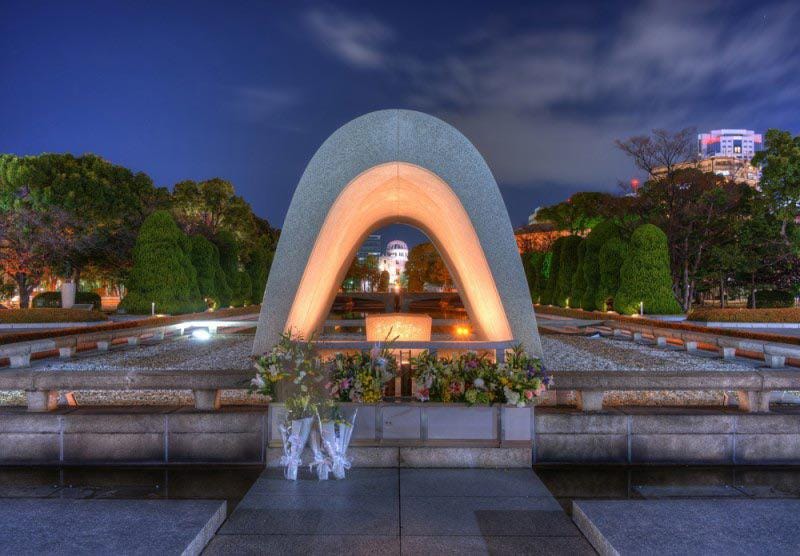

Image: the Flame of Peace in Hiroshima. The flame has burned continuously for 60 years, and will remain lit until the Earth is free from the threat of nuclear annihilation.

Updates from PauseAI activities:

First Friday Flyering: the beginning of a global heartbeat.

Earlier this month, PauseAI volunteers from 9 cities around the world handed out flyers, talked to the public about the risks of AI and the need for a global pause, and recruited passersby to join the movement.

This marked the inaugural session of First Friday Flyering. The idea is simple: on the first Friday of every month, the PauseAI movement will fan out in cities around the world, increasing public awareness and recruiting new members to join our cause. This initiative will also serve as a “monthly heartbeat” for the movement, giving volunteers the chance to coordinate on a global initiative.

Our movement takes inspiration from Fridays for Future, also known as the School Strike for Climate. This started in August 2018, when a young Swedish student named Greta Thunberg walked out of school to protest government inaction on climate change. One year later, in September 2019, the movement staged the largest climate strike in world history, with 4 million participants in 150 countries.

What began as one brave student walking out of class turned into a global movement. We can learn much from her example.

Over time, consistent monthly flyering will allow us to grow, recruit more members into our local groups, educate more people about the risks of AI, communicate the need for a pause, and ultimately become too big to ignore.

If you’d like to participate, please check out our Discord server and events page for more information, or contact joep@pauseai.info.

Upcoming events:

We have lots planned for the next month, including a global protest on November 20-21 coinciding with the AI Safety Conference.

For future events, please check out our events page on Luma. If you’d like to plan an event, add your own!

AI at a glance: The Nobel Prize

The theme of this year’s Nobel Prizes was clear: AI. The Nobel Prize in Physics was awarded to Geoffrey Hinton and John Hopfield for their work on machine learning. Hinton believes that a catastrophic outcome from AI is quite probable, giving it “about 50/50” likelihood; he left Google in 2023 to speak openly about these risks. Hopfield shares his worries, agreeing that we could lose control over AI models. Hopfield was one of the 30,000 people who signed the Future of Life Institute’s Pause letter last year, which, as you might assume, played an important role in inspiring us to start PauseAI.

DeepMind CEO Demis Hassabis was another Nobel Prize winner this year, sharing the Chemistry prize for work using AI to design and predict protein structures. He has been concerned about AI ending humanity for a long time: he famously warned Elon Musk about AI risk back in 2012, which seems to have played an important role in OpenAI being founded years later (hindsight, of course, is 20/20).

It is also worth considering the recipient of the Nobel Peace Prize: the Japan Confederation of A- and H-bomb Sufferers Organizations, a group of activists who survived the bombings of Hiroshima and Nagasaki. They have spent years raising awareness of the horrors of nuclear weapons and have led campaigns for nuclear weapons abolition. While this is not directly related to AI, the parallels are strong. These are people who suffered firsthand the effects of a devastating new technology, which to this day threatens to destroy civilization, and who will not stop until the world is safe. We can take inspiration from them.

What we’ve been reading:

A Narrow Path. This proposal, among the most comprehensive to date, outlines a three-phase plan for achieving a world in which safe transformative AI can be harnessed for the benefit of humanity. Phase 0 of this plan calls for (among many other things) a moratorium on the development of superintelligent AI, with international coordination and a global treaty to achieve this outcome. We see pausing frontier AI development as one piece of the puzzle – a good first step – and A Narrow Path offers a wider view.

Is Pausing AI Possible? by Richard Annilo. This post discusses the feasibility of an international pause on frontier AI development. It covers historical case studies, public opinion, the AI safety landscape in other countries, and other topics to show that pausing may indeed be a viable option.

AI Endgame. This newsletter, written by researcher and investigative journalist Debbie Coffey, regularly covers developments in the world of AI and actions you can take to save the world.

Call to action: how you can get involved!

Organize a Flyering Session in your community. Our next global event will be on Friday, November 8, with volunteers around the world participating. If you’d like to participate, please check out our events page for more information and contact joep@pauseai.info.

Take 5 minutes to write to your politicians about AI risk and the need for a Pause. This can have an outsized impact.

PauseAI is hiring for a Global Organizing Director. Please consider sharing our vacancy with your network and anyone who might be interested!